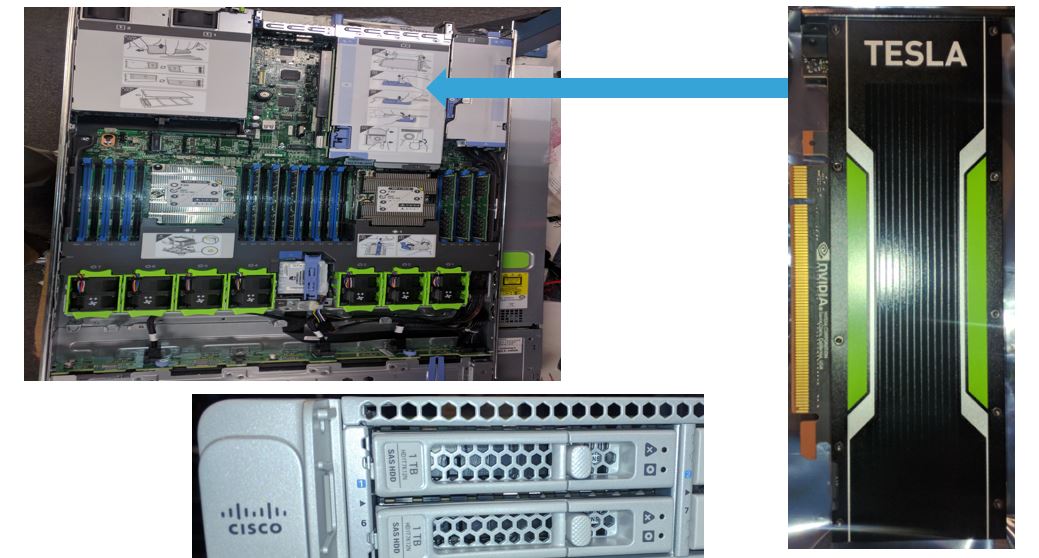

Building a Machine Learning platform using UCS 220 M5 with Tesla P4 GPU

by Wenwei Weng

Machine learning is a very hot field. Big players such as Amazon and Google host machine learning infrastructure in the cloud, which is convenient but can be very expensive to use. I have always been curious whether I could build a relatively powerful machine learning platform myself. The following shows what I achieved using a UCS220-M5 with a Tesla-P4 GPU.

What hardware is required?

- CPU: Dual Intel Xeon Gold 6132 @2.6 GHz, 14 cores (28 threads) each

- GPU: Nvidia Tesla P4 (Compute Capability: 6.1)

- DDR4: 192 GB

- HDD: 2TB

Cisco UCS220-M5 is a good platform to start with!

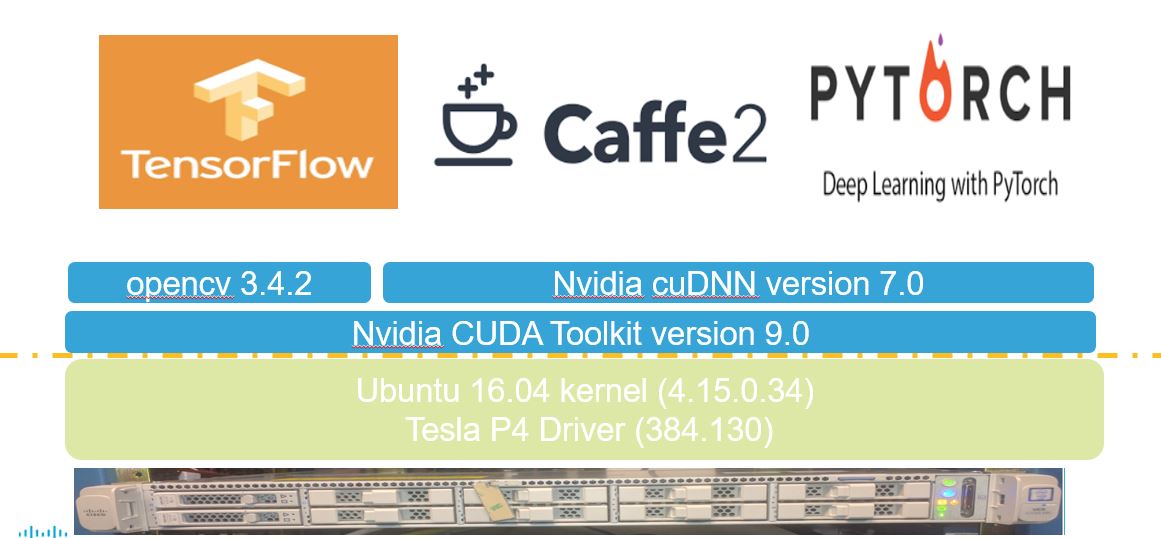

What software stack needs to be built?

The following diagram shows the typical ML software stack. In my case, I built the stack from the bottom up; for the top layer, I only built TensorFlow.

Step#1 Get the Ubuntu 16.04 box ready

iot@iotg-ml-2:~$ lsb_release -a

No LSB modules are available.

Distributor ID: Ubuntu

Description: Ubuntu 16.04.5 LTS

Release: 16.04

Codename: xenial

iot@iotg-ml-2:~$ lspci | grep -i nvidia

d8:00.0 3D controller: NVIDIA Corporation Device 1bb3 (rev a1)

iot@iotg-ml-2:~$

iot@iotg-ml-2:~$ sudo apt-get update

[sudo] password for iot:

Hit:1 http://us.archive.ubuntu.com/ubuntu xenial InRelease

Hit:2 http://us.archive.ubuntu.com/ubuntu xenial-updates InRelease

Hit:3 http://us.archive.ubuntu.com/ubuntu xenial-backports InRelease

Hit:4 http://security.ubuntu.com/ubuntu xenial-security InRelease

Reading package lists... Done

iot@iotg-ml-2:~$ sudo apt-get install build-essential linux-headers-$(uname -r)

Reading package lists... Done

Building dependency tree

Reading state information... Done

build-essential is already the newest version (12.1ubuntu2).

linux-headers-4.15.0-29-generic is already the newest version (4.15.0-29.31~16.04.1).

linux-headers-4.15.0-29-generic set to manually installed.

0 upgraded, 0 newly installed, 0 to remove and 39 not upgraded.

iot@iotg-ml-2:~$ gcc --version

gcc (Ubuntu 5.4.0-6ubuntu1~16.04.10) 5.4.0 20160609

Copyright (C) 2015 Free Software Foundation, Inc.

This is free software; see the source for copying conditions. There is NO

warranty; not even for MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE.

iot@iotg-ml-2:~/Downloads$ sudo apt-get install libncurses5-dev libncursesw5-dev

Reading package lists... Done

Building dependency tree

Reading state information... Done

The following additional packages will be installed:

libtinfo-dev

Suggested packages:

ncurses-doc

The following NEW packages will be installed:

libncurses5-dev libncursesw5-dev libtinfo-dev

0 upgraded, 3 newly installed, 0 to remove and 39 not upgraded.

Need to get 450 kB of archives.

After this operation, 2,642 kB of additional disk space will be used.

Do you want to continue? [Y/n] y

Get:1 http://us.archive.ubuntu.com/ubuntu xenial/main amd64 libtinfo-dev amd64 6.0+20160213-1ubuntu1 [77.4 kB]

Get:2 http://us.archive.ubuntu.com/ubuntu xenial/main amd64 libncurses5-dev amd64 6.0+20160213-1ubuntu1 [175 kB]

Get:3 http://us.archive.ubuntu.com/ubuntu xenial/main amd64 libncursesw5-dev amd64 6.0+20160213-1ubuntu1 [198 kB]

Fetched 450 kB in 3s (140 kB/s)

Selecting previously unselected package libtinfo-dev:amd64.

(Reading database ... 220106 files and directories currently installed.)

Preparing to unpack .../libtinfo-dev_6.0+20160213-1ubuntu1_amd64.deb ...

Unpacking libtinfo-dev:amd64 (6.0+20160213-1ubuntu1) ...

Selecting previously unselected package libncurses5-dev:amd64.

Preparing to unpack .../libncurses5-dev_6.0+20160213-1ubuntu1_amd64.deb ...

Unpacking libncurses5-dev:amd64 (6.0+20160213-1ubuntu1) ...

Selecting previously unselected package libncursesw5-dev:amd64.

Preparing to unpack .../libncursesw5-dev_6.0+20160213-1ubuntu1_amd64.deb ...

Unpacking libncursesw5-dev:amd64 (6.0+20160213-1ubuntu1) ...

Processing triggers for man-db (2.7.5-1) ...

Setting up libtinfo-dev:amd64 (6.0+20160213-1ubuntu1) ...

Setting up libncurses5-dev:amd64 (6.0+20160213-1ubuntu1) ...

Setting up libncursesw5-dev:amd64 (6.0+20160213-1ubuntu1) ...

iot@iotg-ml-2:~/Downloads$ sudo apt-get install libelf-dev

...

iot@iotg-ml-2:~/Downloads$

iot@iotg-ml-2:~$ uname -a

Linux iotg-ml-2 4.15.0-29-generic #31~16.04.1-Ubuntu SMP Wed Jul 18 08:54:04 UTC 2018 x86_64 x86_64 x86_64 GNU/Linux

iot@iotg-ml-2:~$

iot@iotg-ml-2:~/Downloads$ sudo apt-get --purge remove nvidia-*

Reading package lists... Done

Building dependency tree

Reading state information... Done

Note, selecting 'nvidia-325-updates' for glob 'nvidia-*'

....

Package 'nvidia-opencl-icd-375' is not installed, so not removed

0 upgraded, 0 newly installed, 0 to remove and 39 not upgraded.

iot@iotg-ml-2:~/Downloads$

Step#2 Install Nvidia Driver for Tesla P4

From the Nvidia site, the Tesla P4 driver: https://www.nvidia.com/download/driverResults.aspx/112428/en-us

TESLA DRIVER FOR LINUX X64

- Version: 375.20

- Release Date: 2016.12.9

- Operating System: Linux 64-bit

- CUDA Toolkit: 8.0

- Language: English (India)

- File Size: 72.37 MB

- New in Release 375.20: New Device Support: P100, P4

Recommended CUDA version(s): CUDA 8.0

iot@iotg-ml-2:~/Downloads$ ls -l NVIDIA-Linux-x86_64-375.20.run

-rw-rw-r-- 1 iot iot 75886564 Nov 16 2016 NVIDIA-Linux-x86_64-375.20.run

Disable X display server:

iot@iotg-ml-2:~/Downloads$ sudo rm /etc/X11/xorg.conf

rm: cannot remove '/etc/X11/xorg.conf': No such file or directory

iot@iotg-ml-2:~/Downloads$

iot@iotg-ml-2:~/Downloads$ cat /etc/modprobe.d/blacklist-nouveau.conf

blacklist nouveau

options nouveau modeset=0

iot@iotg-ml-2:~/Downloads$ sudo update-initramfs -u

update-initramfs: Generating /boot/initrd.img-4.15.0-34-generic
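Before rebooting, it can help to confirm that the blacklist file really contains both directives. The following is a minimal Python sketch, not part of the original procedure: the function name `nouveau_is_blacklisted` is hypothetical, and it only inspects text in the same format as the `blacklist-nouveau.conf` shown above.

```python
def nouveau_is_blacklisted(conf_text):
    """Return True if the modprobe config text blacklists nouveau
    and disables its kernel mode setting."""
    lines = set()
    for raw in conf_text.splitlines():
        # Drop comments, then normalise whitespace inside the line.
        line = " ".join(raw.split("#", 1)[0].split())
        if line:
            lines.add(line)
    return "blacklist nouveau" in lines and "options nouveau modeset=0" in lines


# Same content as /etc/modprobe.d/blacklist-nouveau.conf in this post.
sample = "blacklist nouveau\noptions nouveau modeset=0\n"
print(nouveau_is_blacklisted(sample))
```

To check the real file, pass `open("/etc/modprobe.d/blacklist-nouveau.conf").read()` instead of the sample string.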

Reboot into text mode (runlevel 3).

This can usually be accomplished by adding the number "3" to the end of the system's kernel boot parameters.

iot@iotg-ml-2:~/Downloads$ sudo reboot

Install the driver from the downloaded run file: failed!!!

iot@iotg-ml-2:~$ sudo service lightdm stop

[sudo] password for iot:

iot@iotg-ml-2:~$ cd ~/Downloads/

iot@iotg-ml-2:~/Downloads$ ls -l

total 1600552

-rwxr-xr-x 1 iot iot 97546170 Sep 26 22:44 cuda_8.0.61.2_linux.run

-rwxr-xr-x 1 iot iot 1465528129 Sep 26 22:45 cuda_8.0.61_375.26_linux.run

-rw-rw-r-- 1 iot iot 75886564 Nov 16 2016 NVIDIA-Linux-x86_64-375.20.run

iot@iotg-ml-2:~/Downloads$ chmod +x NVIDIA-Linux-x86_64-375.20.run

iot@iotg-ml-2:~/Downloads$ sudo ./NVIDIA-Linux-x86_64-375.20.run --no-opengl-files

Verifying archive integrity... OK

Uncompressing NVIDIA Accelerated Graphics Driver for Linux-x86_64 375.20.....


iot@iotg-ml-2:~/Downloads$ cat /usr/lib/nvidia/pre-install

#!/bin/sh

# Trigger an error exit status to prevent the installer from overwriting

# Ubuntu's nvidia packages.

exit 1

iot@iotg-ml-2:~/Downloads$

The local run-file installation failed because this Ubuntu 16.04.5 is the latest release, with kernel 4.15.0, which is not compatible with the installer file. I switched to the version from the Ubuntu repo.

Install the driver using apt-get. Luckily, it succeeds!

iot@iotg-ml-2:~/Downloads$ sudo apt-get install nvidia-375 nvidia-modprobe

...

nvidia_384:

Running module version sanity check.

- Original module

- No original module exists within this kernel

- Installation

- Installing to /lib/modules/4.15.0-34-generic/updates/dkms/

nvidia_384_modeset.ko:

Running module version sanity check.

- Original module

- No original module exists within this kernel

- Installation

- Installing to /lib/modules/4.15.0-34-generic/updates/dkms/

nvidia_384_drm.ko:

Running module version sanity check.

- Original module

- No original module exists within this kernel

- Installation

- Installing to /lib/modules/4.15.0-34-generic/updates/dkms/

nvidia_384_uvm.ko:

Running module version sanity check.

- Original module

- No original module exists within this kernel

- Installation

- Installing to /lib/modules/4.15.0-34-generic/updates/dkms/

depmod............

DKMS: install completed.

Setting up libcuda1-384 (384.130-0ubuntu0.16.04.1) ...

Setting up libjansson4:amd64 (2.7-3ubuntu0.1) ...

Setting up libvdpau1:amd64 (1.1.1-3ubuntu1) ...

Setting up libxnvctrl0 (361.42-0ubuntu1) ...

Setting up mesa-vdpau-drivers:amd64 (18.0.5-0ubuntu0~16.04.1) ...

Setting up nvidia-375 (384.130-0ubuntu0.16.04.1) ...

Setting up nvidia-modprobe (361.28-1) ...

Setting up ocl-icd-libopencl1:amd64 (2.2.8-1) ...

Setting up nvidia-opencl-icd-384 (384.130-0ubuntu0.16.04.1) ...

Setting up bbswitch-dkms (0.8-3ubuntu1) ...

Loading new bbswitch-0.8 DKMS files...

First Installation: checking all kernels...

Building only for 4.15.0-34-generic

Building initial module for 4.15.0-34-generic

Done.

bbswitch:

Running module version sanity check.

- Original module

- No original module exists within this kernel

- Installation

- Installing to /lib/modules/4.15.0-34-generic/updates/dkms/

depmod....

DKMS: install completed.

Setting up nvidia-prime (0.8.2) ...

Setting up screen-resolution-extra (0.17.1.1~16.04.1) ...

Setting up nvidia-settings (361.42-0ubuntu1) ...

Setting up vdpau-driver-all:amd64 (1.1.1-3ubuntu1) ...

Processing triggers for libc-bin (2.23-0ubuntu10) ...

Processing triggers for initramfs-tools (0.122ubuntu8.11) ...

update-initramfs: Generating /boot/initrd.img-4.15.0-34-generic

Processing triggers for shim-signed (1.33.1~16.04.1+13-0ubuntu2) ...

Secure Boot not enabled on this system.

Processing triggers for ureadahead (0.100.0-19) ...

Processing triggers for dbus (1.10.6-1ubuntu3.3) ...

iot@iotg-ml-2:~/Downloads$

Wow, it passed! Now let’s check whether we can see the Tesla-P4 device properly.

iot@iotg-ml-2:~/Downloads$ nvidia-smi

Thu Sep 27 11:30:37 2018

+-----------------------------------------------------------------------------+

| NVIDIA-SMI 384.130 Driver Version: 384.130 |

|-------------------------------+----------------------+----------------------+

| GPU Name Persistence-M| Bus-Id Disp.A | Volatile Uncorr. ECC |

| Fan Temp Perf Pwr:Usage/Cap| Memory-Usage | GPU-Util Compute M. |

|===============================+======================+======================|

| 0 Tesla P4 Off | 00000000:D8:00.0 Off | 0 |

| N/A 37C P0 23W / 75W | 0MiB / 7606MiB | 0% Default |

+-------------------------------+----------------------+----------------------+

+-----------------------------------------------------------------------------+

| Processes: GPU Memory |

| GPU PID Type Process name Usage |

|=============================================================================|

| No running processes found |

+-----------------------------------------------------------------------------+

iot@iotg-ml-2:~/Downloads$

Very nice! We can see the Tesla-P4.

As it turns out, version 384.130 was installed, which means we can use CUDA version 9.0, based on: https://stackoverflow.com/questions/30820513/what-is-the-correct-version-of-cuda-for-my-nvidia-driver/30820690#30820690

- CUDA 9.2: 396.xx

- CUDA 9.1: 387.xx

- CUDA 9.0: 384.xx

- CUDA 8.0: 375.xx (GA2)

- CUDA 8.0: 367.4x

- CUDA 7.5: 352.xx
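The lookup can be written down as a small helper. This is a hedged sketch in Python: the table holds only the driver branches quoted above from the Stack Overflow answer, the function name `newest_cuda_for_driver` is made up for illustration, and it assumes that a newer driver also supports the older CUDA releases.

```python
# Minimum driver branch per CUDA release, copied from the versions quoted above.
# Entries are (driver major version, CUDA version), newest first.
DRIVER_TO_CUDA = [
    (396, "9.2"),
    (387, "9.1"),
    (384, "9.0"),
    (375, "8.0"),  # GA2
    (367, "8.0"),
    (352, "7.5"),
]


def newest_cuda_for_driver(driver_version):
    """Return the newest CUDA version in the table that the given
    driver version string (e.g. "384.130") should support, or None."""
    major = int(driver_version.split(".")[0])
    for min_major, cuda in DRIVER_TO_CUDA:
        if major >= min_major:
            return cuda
    return None


print(newest_cuda_for_driver("384.130"))  # prints 9.0 for the driver installed here
```

A driver older than every entry in the table returns `None`, which is a hint to upgrade the driver before installing any of these CUDA releases.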

After the driver was installed, I rebooted the box, and the X Window display was still working!

Step#3 Install CUDA version 9.0

iot@iotg-ml-2:~/Downloads$ sudo ./cuda_9.0.176_384.81_linux.run --no-opengl-libs

...

Install the CUDA 9.0 Samples?

(y)es/(n)o/(q)uit: y

Enter CUDA Samples Location

[ default is /home/iot ]:

Installing the CUDA Toolkit in /usr/local/cuda-9.0 ...

Missing recommended library: libGLU.so

Missing recommended library: libX11.so

Missing recommended library: libXi.so

Missing recommended library: libXmu.so

Installing the CUDA Samples in /home/iot ...

Copying samples to /home/iot/NVIDIA_CUDA-9.0_Samples now...

Finished copying samples.

===========

= Summary =

===========

Driver: Not Selected

Toolkit: Installed in /usr/local/cuda-9.0

Samples: Installed in /home/iot, but missing recommended libraries

Please make sure that

- PATH includes /usr/local/cuda-9.0/bin

- LD_LIBRARY_PATH includes /usr/local/cuda-9.0/lib64, or, add /usr/local/cuda-9.0/lib64 to /etc/ld.so.conf and run ldconfig as root

To uninstall the CUDA Toolkit, run the uninstall script in /usr/local/cuda-9.0/bin

Please see CUDA_Installation_Guide_Linux.pdf in /usr/local/cuda-9.0/doc/pdf for detailed information on setting up CUDA.

***WARNING: Incomplete installation! This installation did not install the CUDA Driver. A driver of version at least 384.00 is required for CUDA 9.0 functionality to work.

To install the driver using this installer, run the following command, replacing <CudaInstaller> with the name of this run file:

sudo <CudaInstaller>.run -silent -driver

Logfile is /tmp/cuda_install_2263.log
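The installer summary above asks for two environment settings. As a rough sketch (the helper name `missing_cuda_paths` is hypothetical, and the default prefix `/usr/local/cuda-9.0` is taken from the summary), the following Python snippet reports which of them are still missing from a given environment:

```python
import os


def missing_cuda_paths(environ, cuda_home="/usr/local/cuda-9.0"):
    """Return the names of environment variables that do not yet
    contain the directories the CUDA installer asks for."""
    required = {
        "PATH": cuda_home + "/bin",
        "LD_LIBRARY_PATH": cuda_home + "/lib64",
    }
    missing = []
    for name in sorted(required):
        entries = environ.get(name, "").split(os.pathsep)
        # Compare without trailing slashes so ".../bin/" also matches.
        if required[name] not in [e.rstrip("/") for e in entries]:
            missing.append(name)
    return missing


print(missing_cuda_paths(os.environ))
```

An empty list means both directories are already on the search paths. Note that the check only looks at environment variables: if `/usr/local/cuda-9.0/lib64` was added to `/etc/ld.so.conf` instead, as the installer also allows, `LD_LIBRARY_PATH` is reported as missing even though the libraries can be found.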

iot@iotg-ml-2:~/Downloads$

Verify that the CUDA software is running fine by using the provided sample application deviceQuery:

iot@iotg-ml-2:~/NVIDIA_CUDA-9.0_Samples$ pwd

/home/iot/NVIDIA_CUDA-9.0_Samples

iot@iotg-ml-2:~/NVIDIA_CUDA-9.0_Samples$ ls

0_Simple 2_Graphics 4_Finance 6_Advanced bin EULA.txt

1_Utilities 3_Imaging 5_Simulations 7_CUDALibraries common Makefile

iot@iotg-ml-2:~/NVIDIA_CUDA-9.0_Samples$ make

...

...

...

iot@iotg-ml-2:~/NVIDIA_CUDA-9.0_Samples/bin/x86_64/linux/release$ ./deviceQuery

./deviceQuery Starting...

CUDA Device Query (Runtime API) version (CUDART static linking)

Detected 1 CUDA Capable device(s)

Device 0: "Tesla P4"

CUDA Driver Version / Runtime Version 9.0 / 9.0

CUDA Capability Major/Minor version number: 6.1

Total amount of global memory: 7606 MBytes (7975862272 bytes)

(20) Multiprocessors, (128) CUDA Cores/MP: 2560 CUDA Cores

GPU Max Clock rate: 1114 MHz (1.11 GHz)

Memory Clock rate: 3003 Mhz

Memory Bus Width: 256-bit

L2 Cache Size: 2097152 bytes

Maximum Texture Dimension Size (x,y,z) 1D=(131072), 2D=(131072, 65536), 3D=(16384, 16384, 16384)

Maximum Layered 1D Texture Size, (num) layers 1D=(32768), 2048 layers

Maximum Layered 2D Texture Size, (num) layers 2D=(32768, 32768), 2048 layers

Total amount of constant memory: 65536 bytes

Total amount of shared memory per block: 49152 bytes

Total number of registers available per block: 65536

Warp size: 32

Maximum number of threads per multiprocessor: 2048

Maximum number of threads per block: 1024

Max dimension size of a thread block (x,y,z): (1024, 1024, 64)

Max dimension size of a grid size (x,y,z): (2147483647, 65535, 65535)

Maximum memory pitch: 2147483647 bytes

Texture alignment: 512 bytes

Concurrent copy and kernel execution: Yes with 2 copy engine(s)

Run time limit on kernels: No

Integrated GPU sharing Host Memory: No

Support host page-locked memory mapping: Yes

Alignment requirement for Surfaces: Yes

Device has ECC support: Enabled

Device supports Unified Addressing (UVA): Yes

Supports Cooperative Kernel Launch: Yes

Supports MultiDevice Co-op Kernel Launch: Yes

Device PCI Domain ID / Bus ID / location ID: 0 / 216 / 0

Compute Mode:

< Default (multiple host threads can use ::cudaSetDevice() with device simultaneously) >

deviceQuery, CUDA Driver = CUDART, CUDA Driver Version = 9.0, CUDA Runtime Version = 9.0, NumDevs = 1

Result = PASS

iot@iotg-ml-2:~/NVIDIA_CUDA-9.0_Samples/bin/x86_64/linux/release$

All looks cool so far!
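Two of the figures in the deviceQuery report can be cross-checked by hand. The Python sketch below redoes that arithmetic with the numbers copied from the output above; the variable and function names are only illustrative.

```python
# Values copied from the deviceQuery output above.
multiprocessors = 20
cores_per_mp = 128
global_memory_bytes = 7975862272


def to_mib(num_bytes):
    """Convert bytes to whole mebibytes (1 MiB = 1024 * 1024 bytes)."""
    return num_bytes // (1024 * 1024)


total_cores = multiprocessors * cores_per_mp
print(total_cores)                  # 2560, matches "2560 CUDA Cores"
print(to_mib(global_memory_bytes))  # 7606, matches "7606 MBytes"
```

The 7606 figure is also the total memory that nvidia-smi reported for the Tesla P4 earlier, so the driver and the CUDA runtime agree on the device.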

Step#4 Install NVIDIA CUDA® Deep Neural Network library (cuDNN) version 7.1.4

Ref: https://medium.com/@zhanwenchen/install-cuda-9-2-and-cudnn-7-1-for-tensorflow-pytorch-gpu-on-ubuntu-16-04-1822ab4b2421

From https://developer.nvidia.com/rdp/cudnn-archive, under "Download cuDNN v7.1.4 (May 16, 2018), for CUDA 9.0", download:

- cuDNN v7.1.4 Runtime Library for Ubuntu16.04 (Deb)

- cuDNN v7.1.4 Developer Library for Ubuntu16.04 (Deb)

- cuDNN v7.1.4 Code Samples and User Guide for Ubuntu16.04 (Deb)

iot@iotg-ml-2:~/Downloads$ ls -l

total 3781372

-rwxr-xr-x 1 iot iot 97546170 Sep 26 22:44 cuda_8.0.61.2_linux.run

-rwxr-xr-x 1 iot iot 1465528129 Sep 26 22:45 cuda_8.0.61_375.26_linux.run

-rwxr-xr-x 1 iot iot 116529155 Sep 27 12:04 cuda_9.0.176.1_linux.run

-rwxr-xr-x 1 iot iot 72212159 Sep 27 12:04 cuda_9.0.176.2_linux.run

-rwxr-xr-x 1 iot iot 1643293725 Sep 27 12:04 cuda_9.0.176_384.81_linux.run

-rwxr-xr-x 1 iot iot 76500577 Sep 27 12:04 cuda_9.0.176.3_linux.run

-rwxr-xr-x 1 iot iot 77497173 Sep 27 12:04 cuda_9.0.176.4_linux.run

-rwxr-xr-x 1 iot iot 126202024 Sep 27 15:11 libcudnn7_7.1.4.18-1+cuda9.0_amd64.deb

-rwxr-xr-x 1 iot iot 116411616 Sep 27 15:11 libcudnn7-dev_7.1.4.18-1+cuda9.0_amd64.deb

-rwxr-xr-x 1 iot iot 4491360 Sep 27 15:11 libcudnn7-doc_7.1.4.18-1+cuda9.0_amd64.deb

-rwxrwxr-x 1 iot iot 75886564 Nov 16 2016 NVIDIA-Linux-x86_64-375.20.run

iot@iotg-ml-2:~/Downloads$ sudo dpkg -i libcudnn7_7.1.4.18-1+cuda9.0_amd64.deb

[sudo] password for iot:

Selecting previously unselected package libcudnn7.

(Reading database ... 221264 files and directories currently installed.)

Preparing to unpack libcudnn7_7.1.4.18-1+cuda9.0_amd64.deb ...

Unpacking libcudnn7 (7.1.4.18-1+cuda9.0) ...

Setting up libcudnn7 (7.1.4.18-1+cuda9.0) ...

Processing triggers for libc-bin (2.23-0ubuntu10) ...

iot@iotg-ml-2:~/Downloads$ sudo dpkg -i libcudnn7-dev_7.1.4.18-1+cuda9.0_amd64.deb

Selecting previously unselected package libcudnn7-dev.

(Reading database ... 221271 files and directories currently installed.)

Preparing to unpack libcudnn7-dev_7.1.4.18-1+cuda9.0_amd64.deb ...

Unpacking libcudnn7-dev (7.1.4.18-1+cuda9.0) ...

Setting up libcudnn7-dev (7.1.4.18-1+cuda9.0) ...

update-alternatives: using /usr/include/x86_64-linux-gnu/cudnn_v7.h to provide /usr/include/cudnn.h (libcudnn) in auto mode

iot@iotg-ml-2:~/Downloads$ sudo dpkg -i libcudnn7-doc_7.1.4.18-1+cuda9.0_amd64.deb

Selecting previously unselected package libcudnn7-doc.

(Reading database ... 221277 files and directories currently installed.)

Preparing to unpack libcudnn7-doc_7.1.4.18-1+cuda9.0_amd64.deb ...

Unpacking libcudnn7-doc (7.1.4.18-1+cuda9.0) ...

Setting up libcudnn7-doc (7.1.4.18-1+cuda9.0) ...

iot@iotg-ml-2:~/Downloads$

After successful installation, we can verify if it is working:

iot@iotg-ml-2:~$ cp -r /usr/src/cudnn_samples_v7/ .

iot@iotg-ml-2:~$ cd cudnn_samples_v7/

iot@iotg-ml-2:~/cudnn_samples_v7$ ls

conv_sample mnistCUDNN RNN

iot@iotg-ml-2:~/cudnn_samples_v7$ cd mnistCUDNN/

iot@iotg-ml-2:~/cudnn_samples_v7/mnistCUDNN$ ls

data error_util.h fp16_dev.cu fp16_dev.h fp16_emu.cpp fp16_emu.h FreeImage gemv.h Makefile mnistCUDNN.cpp readme.txt

iot@iotg-ml-2:~/cudnn_samples_v7/mnistCUDNN$ make clean && make

rm -rf *o

rm -rf mnistCUDNN

/usr/local/cuda/bin/nvcc -ccbin g++ -I/usr/local/cuda/include -IFreeImage/include -m64 -gencode arch=compute_30,code=sm_30 -gencode arch=compute_35,code=sm_35 -gencode arch=compute_50,code=sm_50 -gencode arch=compute_53,code=sm_53 -gencode arch=compute_53,code=compute_53 -o fp16_dev.o -c fp16_dev.cu

g++ -I/usr/local/cuda/include -IFreeImage/include -o fp16_emu.o -c fp16_emu.cpp

g++ -I/usr/local/cuda/include -IFreeImage/include -o mnistCUDNN.o -c mnistCUDNN.cpp

/usr/local/cuda/bin/nvcc -ccbin g++ -m64 -gencode arch=compute_30,code=sm_30 -gencode arch=compute_35,code=sm_35 -gencode arch=compute_50,code=sm_50 -gencode arch=compute_53,code=sm_53 -gencode arch=compute_53,code=compute_53 -o mnistCUDNN fp16_dev.o fp16_emu.o mnistCUDNN.o -LFreeImage/lib/linux/x86_64 -LFreeImage/lib/linux -lcudart -lcublas -lcudnn -lfreeimage -lstdc++ -lm

iot@iotg-ml-2:~/cudnn_samples_v7/mnistCUDNN$ ./mnistCUDNN

cudnnGetVersion() : 7104 , CUDNN_VERSION from cudnn.h : 7104 (7.1.4)

Host compiler version : GCC 5.4.0

There are 1 CUDA capable devices on your machine :

device 0 : sms 20 Capabilities 6.1, SmClock 1113.5 Mhz, MemSize (Mb) 7606, MemClock 3003.0 Mhz, Ecc=1, boardGroupID=0

Using device 0

Testing single precision

Loading image data/one_28x28.pgm

Performing forward propagation ...

Testing cudnnGetConvolutionForwardAlgorithm ...

Fastest algorithm is Algo 1

Testing cudnnFindConvolutionForwardAlgorithm ...

^^^^ CUDNN_STATUS_SUCCESS for Algo 0: 0.050176 time requiring 0 memory

^^^^ CUDNN_STATUS_SUCCESS for Algo 1: 0.052224 time requiring 3464 memory

^^^^ CUDNN_STATUS_SUCCESS for Algo 2: 0.073728 time requiring 57600 memory

^^^^ CUDNN_STATUS_SUCCESS for Algo 7: 0.140288 time requiring 2057744 memory

^^^^ CUDNN_STATUS_SUCCESS for Algo 5: 0.189440 time requiring 203008 memory

Resulting weights from Softmax:

0.0000000 0.9999399 0.0000000 0.0000000 0.0000561 0.0000000 0.0000012 0.0000017 0.0000010 0.0000000

Loading image data/three_28x28.pgm

Performing forward propagation ...

Resulting weights from Softmax:

0.0000000 0.0000000 0.0000000 0.9999288 0.0000000 0.0000711 0.0000000 0.0000000 0.0000000 0.0000000

Loading image data/five_28x28.pgm

Performing forward propagation ...

Resulting weights from Softmax:

0.0000000 0.0000008 0.0000000 0.0000002 0.0000000 0.9999820 0.0000154 0.0000000 0.0000012 0.0000006

Result of classification: 1 3 5

Test passed!

Testing half precision (math in single precision)

Loading image data/one_28x28.pgm

Performing forward propagation ...

Testing cudnnGetConvolutionForwardAlgorithm ...

Fastest algorithm is Algo 1

Testing cudnnFindConvolutionForwardAlgorithm ...

^^^^ CUDNN_STATUS_SUCCESS for Algo 0: 0.041888 time requiring 0 memory

^^^^ CUDNN_STATUS_SUCCESS for Algo 1: 0.046080 time requiring 3464 memory

^^^^ CUDNN_STATUS_SUCCESS for Algo 2: 0.073728 time requiring 28800 memory

^^^^ CUDNN_STATUS_SUCCESS for Algo 7: 0.133088 time requiring 2057744 memory

^^^^ CUDNN_STATUS_SUCCESS for Algo 5: 0.207904 time requiring 203008 memory

Resulting weights from Softmax:

0.0000001 1.0000000 0.0000001 0.0000000 0.0000563 0.0000001 0.0000012 0.0000017 0.0000010 0.0000001

Loading image data/three_28x28.pgm

Performing forward propagation ...

Resulting weights from Softmax:

0.0000000 0.0000000 0.0000000 1.0000000 0.0000000 0.0000714 0.0000000 0.0000000 0.0000000 0.0000000

Loading image data/five_28x28.pgm

Performing forward propagation ...

Resulting weights from Softmax:

0.0000000 0.0000008 0.0000000 0.0000002 0.0000000 1.0000000 0.0000154 0.0000000 0.0000012 0.0000006

Result of classification: 1 3 5

Test passed!
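Two details of the mnistCUDNN output are easy to decode. For cuDNN 7, the integer printed by `cudnnGetVersion()` packs the version as major * 1000 + minor * 100 + patch level, and the "Result of classification" is the index of the largest softmax weight for each image. A minimal Python sketch of both, with hypothetical helper names and the numbers copied from the single-precision run above:

```python
def decode_cudnn_version(value):
    """Split a cuDNN 7 style version integer into (major, minor, patch)."""
    major, rest = divmod(value, 1000)
    minor, patch = divmod(rest, 100)
    return major, minor, patch


def predicted_digit(softmax_weights):
    """Return the index of the largest weight, i.e. the predicted digit."""
    return max(range(len(softmax_weights)), key=lambda i: softmax_weights[i])


# Softmax rows copied from the single-precision run above.
one = [0.0, 0.9999399, 0.0, 0.0, 0.0000561, 0.0, 0.0000012, 0.0000017, 0.0000010, 0.0]
three = [0.0, 0.0, 0.0, 0.9999288, 0.0, 0.0000711, 0.0, 0.0, 0.0, 0.0]
five = [0.0, 0.0000008, 0.0, 0.0000002, 0.0, 0.9999820, 0.0000154, 0.0, 0.0000012, 0.0000006]

print(decode_cudnn_version(7104))                            # (7, 1, 4)
print([predicted_digit(row) for row in (one, three, five)])  # [1, 3, 5]
```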

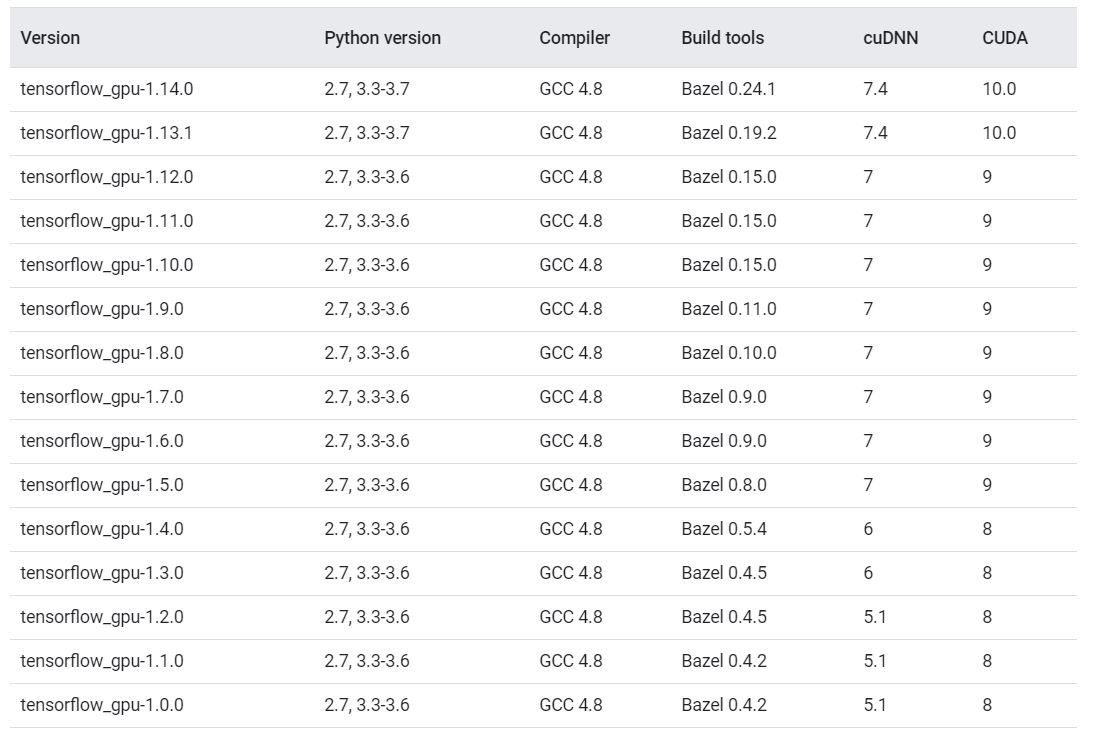

iot@iotg-ml-2:~/cudnn_samples_v7/mnistCUDNN$

Step#5 Install TensorFlow: tensorflow_gpu-1.10.0

Step#5.1 Install bazel version 0.15.2 first

https://docs.bazel.build/versions/master/install-ubuntu.html

Using installer binary to install:

- sudo apt-get install pkg-config zip g++ zlib1g-dev unzip python

- https://github.com/bazelbuild/bazel/releases:

Step#5.2 Install NCCL

https://developer.nvidia.com/nccl/nccl-legacy-downloads

iot@iotg-ml-2:~/Downloads$ sudo apt-key add /var/nccl-repo-2.2.13-ga-cuda9.0/7fa2af80.pub

OK

iot@iotg-ml-2:~/Downloads$ ls /usr/local/cuda/

bin extras jre libnsight nsightee_plugins nvvm samples src version.txt

doc include lib64 libnvvp nvml pkgconfig share tools

iot@iotg-ml-2:~/Downloads$ sudo dpkg -i nccl-repo-ubuntu1604-2.2.13-ga-cuda9.0_1-1_amd64.deb

(Reading database ... 224455 files and directories currently installed.)

Preparing to unpack nccl-repo-ubuntu1604-2.2.13-ga-cuda9.0_1-1_amd64.deb ...

Unpacking nccl-repo-ubuntu1604-2.2.13-ga-cuda9.0 (1-1) over (1-1) ...

Setting up nccl-repo-ubuntu1604-2.2.13-ga-cuda9.0 (1-1) ...

iot@iotg-ml-2:~/Downloads$

iot@iotg-ml-2:~/Downloads$ cd /usr/local/cuda

iot@iotg-ml-2:/usr/local/cuda$ sudo tar xvf ~/Downloads/nccl_2.2.13-1+cuda9.0_x86_64.txz

nccl_2.2.13-1+cuda9.0_x86_64/include/

nccl_2.2.13-1+cuda9.0_x86_64/include/nccl.h

nccl_2.2.13-1+cuda9.0_x86_64/lib/

nccl_2.2.13-1+cuda9.0_x86_64/lib/libnccl.so.2.2.13

nccl_2.2.13-1+cuda9.0_x86_64/lib/libnccl_static.a

nccl_2.2.13-1+cuda9.0_x86_64/lib/libnccl.so

nccl_2.2.13-1+cuda9.0_x86_64/lib/libnccl.so.2

nccl_2.2.13-1+cuda9.0_x86_64/COPYRIGHT.txt

nccl_2.2.13-1+cuda9.0_x86_64/NCCL-SLA.txt

iot@iotg-ml-2:/usr/local/cuda$

Step#5.3 Build TensorFlow

https://www.tensorflow.org/install/source#tested_source_configurations

sudo apt-get install git python-dev python-pip

sudo apt-get install openjdk-8-jdk

pip install -U --user pip six numpy wheel mock

pip install -U --user keras_applications==1.0.5 --no-deps

pip install -U --user keras_preprocessing==1.0.3 --no-deps

iot@iotg-ml-2:~/$ git clone https://github.com/tensorflow/tensorflow.git

iot@iotg-ml-2:~/$ cd tensorflow && git checkout r1.10

iot@iotg-ml-2:~/tensorflow$ ./configure

WARNING: Running Bazel server needs to be killed, because the startup options are different.

WARNING: ignoring http_proxy in environment.

WARNING: --batch mode is deprecated. Please instead explicitly shut down your Bazel server using the command "bazel shutdown".

You have bazel 0.15.0 installed.

Please specify the location of python. [Default is /usr/bin/python]:

Found possible Python library paths:

/usr/local/lib/python2.7/dist-packages

/usr/lib/python2.7/dist-packages

Please input the desired Python library path to use. Default is [/usr/local/lib/python2.7/dist-packages]

Do you wish to build TensorFlow with jemalloc as malloc support? [Y/n]: y

jemalloc as malloc support will be enabled for TensorFlow.

Do you wish to build TensorFlow with Google Cloud Platform support? [Y/n]: y

Google Cloud Platform support will be enabled for TensorFlow.

Do you wish to build TensorFlow with Hadoop File System support? [Y/n]: y

Hadoop File System support will be enabled for TensorFlow.

Do you wish to build TensorFlow with Amazon AWS Platform support? [Y/n]: y

Amazon AWS Platform support will be enabled for TensorFlow.

Do you wish to build TensorFlow with Apache Kafka Platform support? [Y/n]: y

Apache Kafka Platform support will be enabled for TensorFlow.

Do you wish to build TensorFlow with XLA JIT support? [y/N]: n

No XLA JIT support will be enabled for TensorFlow.

Do you wish to build TensorFlow with GDR support? [y/N]: n

No GDR support will be enabled for TensorFlow.

Do you wish to build TensorFlow with VERBS support? [y/N]: n

No VERBS support will be enabled for TensorFlow.

Do you wish to build TensorFlow with OpenCL SYCL support? [y/N]: n

No OpenCL SYCL support will be enabled for TensorFlow.

Do you wish to build TensorFlow with CUDA support? [y/N]: y

CUDA support will be enabled for TensorFlow.

Please specify the CUDA SDK version you want to use. [Leave empty to default to CUDA 9.0]: 9.0

Please specify the location where CUDA 9.0 toolkit is installed. Refer to README.md for more details. [Default is /usr/local/cuda]:

Please specify the cuDNN version you want to use. [Leave empty to default to cuDNN 7.0]: 7.1

Please specify the location where cuDNN 7 library is installed. Refer to README.md for more details. [Default is /usr/local/cuda]:

Do you wish to build TensorFlow with TensorRT support? [y/N]: n

No TensorRT support will be enabled for TensorFlow.

Please specify the NCCL version you want to use. If NCCL 2.2 is not installed, then you can use version 1.3 that can be fetched automatically but it may have worse performance with multiple GPUs. [Default is 2.2]:

Please specify the location where NCCL 2 library is installed. Refer to README.md for more details. [Default is /usr/local/cuda]:/usr/local/cuda/nccl_2.2.13-1+cuda9.0_x86_64

Please specify a list of comma-separated Cuda compute capabilities you want to build with.

You can find the compute capability of your device at: https://developer.nvidia.com/cuda-gpus.

Please note that each additional compute capability significantly increases your build time and binary size. [Default is: 6.1]

Do you want to use clang as CUDA compiler? [y/N]: n

nvcc will be used as CUDA compiler.

Please specify which gcc should be used by nvcc as the host compiler. [Default is /usr/bin/gcc]:

Do you wish to build TensorFlow with MPI support? [y/N]: N

No MPI support will be enabled for TensorFlow.

Please specify optimization flags to use during compilation when bazel option "--config=opt" is specified [Default is -march=native]:

Would you like to interactively configure ./WORKSPACE for Android builds? [y/N]: N

Not configuring the WORKSPACE for Android builds.

Preconfigured Bazel build configs. You can use any of the below by adding "--config=<>" to your build command. See tools/bazel.rc for more details.

--config=mkl # Build with MKL support.

--config=monolithic # Config for mostly static monolithic build.

Configuration finished

iot@iotg-ml-2:~/tensorflow$
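The CUDA-related answers given to `./configure` above can, as far as I know, also be supplied through environment variables so that the script does not prompt for them. The sketch below collects those answers in Python and prints them as shell `export` lines. The variable names are an assumption based on TensorFlow's `configure.py` and should be checked against the branch you have checked out; the function names are hypothetical.

```python
def cuda_configure_env():
    """Answers given to ./configure above, expressed as environment
    variables (assumed names; check configure.py in your checkout)."""
    return {
        "PYTHON_BIN_PATH": "/usr/bin/python",
        "TF_NEED_CUDA": "1",
        "TF_CUDA_VERSION": "9.0",
        "CUDA_TOOLKIT_PATH": "/usr/local/cuda",
        "TF_CUDNN_VERSION": "7.1",
        "CUDNN_INSTALL_PATH": "/usr/local/cuda",
        "TF_CUDA_COMPUTE_CAPABILITIES": "6.1",
    }


def as_export_lines(env):
    """Render the settings as shell export statements, sorted by name."""
    return ["export {}={}".format(name, env[name]) for name in sorted(env)]


for line in as_export_lines(cuda_configure_env()):
    print(line)
```

Only the settings whose names I am reasonably sure of are listed; the remaining prompts (jemalloc, cloud platforms, NCCL, and so on) would still be asked interactively.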

iot@iotg-ml-2:~/tensorflow$ bazel build --config=opt --config=cuda //tensorflow/tools/pip_package:build_pip_package

...

Target //tensorflow/tools/pip_package:build_pip_package up-to-date:

bazel-bin/tensorflow/tools/pip_package/build_pip_package

INFO: Elapsed time: 233.016s, Critical Path: 179.72s

INFO: 949 processes: 949 local.

INFO: Build completed successfully, 952 total actions

iot@iotg-ml-2:~/tensorflow$ ./bazel-bin/tensorflow/tools/pip_package/build_pip_package /tmp/tensorflow_pkg

Thu Sep 27 17:52:45 PDT 2018 : === Preparing sources in dir: /tmp/tmp.WxMRTJEqdr

~/tensorflow ~/tensorflow

~/tensorflow

Thu Sep 27 17:53:02 PDT 2018 : === Building wheel

warning: no files found matching '*.dll' under directory '*'

warning: no files found matching '*.lib' under directory '*'

warning: no files found matching '*.h' under directory 'tensorflow/include/tensorflow'

warning: no files found matching '*' under directory 'tensorflow/include/Eigen'

warning: no files found matching '*.h' under directory 'tensorflow/include/google'

warning: no files found matching '*' under directory 'tensorflow/include/third_party'

warning: no files found matching '*' under directory 'tensorflow/include/unsupported'

Thu Sep 27 17:53:18 PDT 2018 : === Output wheel file is in: /tmp/tensorflow_pkg

iot@iotg-ml-2:~/tensorflow$ ls /tmp/tensorflow_pkg

tensorflow-1.10.1-cp27-cp27mu-linux_x86_64.whl

iot@iotg-ml-2:~/tensorflow$ file /tmp/tensorflow_pkg/tensorflow-1.10.1-cp27-cp27mu-linux_x86_64.whl

/tmp/tensorflow_pkg/tensorflow-1.10.1-cp27-cp27mu-linux_x86_64.whl: Zip archive data, at least v2.0 to extract

iot@iotg-ml-2:~/tensorflow$
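The wheel filename encodes which interpreter it was built for. The sketch below splits it into the standard fields (distribution, version, Python tag, ABI tag, platform tag); `parse_wheel_name` is a hypothetical helper and handles only simple names without a build tag, like the one produced above.

```python
def parse_wheel_name(filename):
    """Split a wheel filename without a build tag into its five fields."""
    if not filename.endswith(".whl"):
        raise ValueError("not a wheel file: " + filename)
    parts = filename[:-len(".whl")].split("-")
    if len(parts) != 5:
        raise ValueError("unexpected wheel name: " + filename)
    keys = ("distribution", "version", "python_tag", "abi_tag", "platform_tag")
    return dict(zip(keys, parts))


info = parse_wheel_name("tensorflow-1.10.1-cp27-cp27mu-linux_x86_64.whl")
print("{} {} {}".format(info["version"], info["python_tag"], info["abi_tag"]))
```

Here `cp27` means CPython 2.7, so this wheel can only be installed with a Python 2.7 pip.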

sudo /home/iot/.local/bin/pip install h5py

sudo /home/iot/.local/bin/pip install keras

iot@iotg-ml-2:~/tensorflow$ sudo /home/iot/.local/bin/pip install tensorflow-1.10.1-cp27-cp27mu-linux_x86_64.whl

Give it a test drive!!!

iot@iotg-ml-2:~$ python

Python 2.7.12 (default, Dec 4 2017, 14:50:18)

[GCC 5.4.0 20160609] on linux2

Type "help", "copyright", "credits" or "license" for more information.

>>> import tensorflow as tf

>>> mnist = tf.keras.datasets.mnist

>>> (x_train, y_train),(x_test, y_test) = mnist.load_data()

Downloading data from https://storage.googleapis.com/tensorflow/tf-keras-datasets/mnist.npz

11493376/11490434 [==============================] - 8s 1us/step

11501568/11490434 [==============================] - 8s 1us/step

>>> x_train, x_test = x_train / 255.0, x_test / 255.0

>>> model = tf.keras.models.Sequential([

... tf.keras.layers.Flatten(),

... tf.keras.layers.Dense(512, activation=tf.nn.relu),

... tf.keras.layers.Dropout(0.2),

... tf.keras.layers.Dense(10, activation=tf.nn.softmax)

... ])
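The interactive session above stops after the model is defined. A possible continuation, following the standard TensorFlow/Keras MNIST quickstart rather than anything recorded in the original session, compiles, trains, and evaluates the model. This is a sketch under assumptions: the optimizer, loss, and `epochs=5` are the quickstart defaults, `run_drive_test` is a made-up name, and it needs the TensorFlow wheel built above to be installed.

```python
def run_drive_test(epochs=5):
    """Train and evaluate the small MNIST classifier from the session above."""
    import tensorflow as tf  # imported lazily; requires the wheel built above

    # An empty string here means TensorFlow did not find a usable GPU.
    print("GPU device: %r" % tf.test.gpu_device_name())

    (x_train, y_train), (x_test, y_test) = tf.keras.datasets.mnist.load_data()
    x_train, x_test = x_train / 255.0, x_test / 255.0

    model = tf.keras.models.Sequential([
        tf.keras.layers.Flatten(),
        tf.keras.layers.Dense(512, activation=tf.nn.relu),
        tf.keras.layers.Dropout(0.2),
        tf.keras.layers.Dense(10, activation=tf.nn.softmax),
    ])
    # Assumed settings, taken from the TensorFlow quickstart.
    model.compile(optimizer="adam",
                  loss="sparse_categorical_crossentropy",
                  metrics=["accuracy"])
    model.fit(x_train, y_train, epochs=epochs)
    return model.evaluate(x_test, y_test)  # [loss, accuracy]
```

Calling `run_drive_test()` from the Python prompt runs the whole test; if the GPU build works, running nvidia-smi in a second terminal during training should list the python process.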

>>>

TensorFlow dependency chart: https://www.tensorflow.org/install/source#tested_source_configurations (at the end of the page)
